The following writeup describes a model of consciousness (which I will henceforth refer to as cognition so that the philosophers don't get too uppity) that should be able to reproduce its various aspects and that can (hopefully) be proven at some point by an explicitly constructed example.

I am assuming that the human experience of consciousness (specifically, my own) is reasonably representative of an 'average' consciousness and doesn't differ from it in any significant, fundamental way.

To be satisfactory, a model of cognition needs to support the following properties:

- memory formation and recall

- arbitrary manipulation of ideas

- reasonably accurate modelling of the world via idea manipulation

- goal-orientation

- translation of sensory input to ideal representation

- translation of ideas to actions

- metacognition

- counterfactuals

- sense of self

Note that this isn't a minimal list of properties: for example, if someone found that their body had been totally hijacked and no longer responded at all to what they wanted to do, it would be reasonable to say that they were still conscious, even though they no longer supported one of the points above (translation of ideas to actions).

In fact, I'd say that the only points that are truly necessary are the first, second and fourth (memory formation and recall, arbitrary manipulation of ideas, and goal-orientation), but a mind that supported none of the rest would be very alien indeed, and thus hard to reason about.

Note: from here on out, I will be referring to ideas as 'symbols'.

The High Level

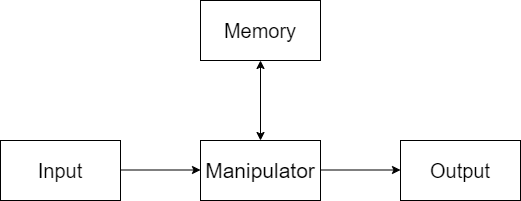

This model describes cognition as being laid out in four broad structures, related as shown in the image above:

Input is, well, sensory input. This structure converts raw sensory information into a symbolic representation.

The manipulator is a structure that handles the manipulation of symbols (really, it could probably be subdivided a great deal, since it does a lot).

Memory is where all symbolic information is stored, either in the short term or longer.

Output takes symbolic information and converts it into a signal that is used to manipulate something outside of the mind.

Here's what a typical cognitive 'cycle' might look like:

- Input translates the current sensory information into a basic symbolic representation and pushes that to a short 'buffer' of sensory information in memory.

- Manipulator reads the symbolic representation provided by input and enhances it with additional symbolic information from memory.

- Manipulator reads from recent symbols in memory and retrieves more symbols from memory that it deems salient.

- Manipulator constructs, from the symbols it currently has access to, a new symbol representing a course of action to take, and hands it to output.

- Output takes the symbol and converts it into an action.

- Manipulator marks some recent symbols that it deems salient to be stored in longer-term memory.

Note that there is no strict order of execution: all of these can be done in parallel or even asynchronously, so long as they are all done frequently enough.
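The cycle above can be sketched as one sequential pass in Python. This is a toy under stated assumptions: symbols are plain strings, the `associations` table is a hypothetical stand-in for however memory retrieval actually works, and the stage names plus the `see:`/`do:` prefixes are illustrative inventions. Running the stages in order is only a simplification, since the text allows them to run in parallel or asynchronously.

```python
from dataclasses import dataclass, field

@dataclass
class Memory:
    sensory_buffer: list = field(default_factory=list)  # short buffer of recent percepts
    working: list = field(default_factory=list)         # symbols the manipulator currently holds
    long_term: set = field(default_factory=set)         # symbols marked for longer-term storage
    buffer_size: int = 3

def input_stage(raw: str, memory: Memory) -> str:
    # Translate raw sensation into a basic symbol and push it to the sensory buffer
    symbol = f"see:{raw}"
    memory.sensory_buffer.append(symbol)
    del memory.sensory_buffer[:-memory.buffer_size]  # keep only the newest few
    return symbol

def manipulator_stage(percept: str, memory: Memory, associations: dict) -> str:
    # Enhance the percept with salient symbols retrieved from memory
    memory.working = [percept, *associations.get(percept, [])]
    # Construct an action symbol (hypothetical rule: first "do:" symbol loaded, else do nothing)
    action = next((s for s in memory.working if s.startswith("do:")), "do:nothing")
    # Mark salient symbols (hypothetically: every non-action symbol) for long-term storage
    memory.long_term.update(s for s in memory.working if not s.startswith("do:"))
    return action

def output_stage(action: str) -> str:
    # Convert the action symbol into an "action" (here, just its name)
    return action.split(":", 1)[1]

memory = Memory()
associations = {"see:book": ["book-is-movable", "do:push"]}
actions = [output_stage(manipulator_stage(input_stage(raw, memory), memory, associations))
           for raw in ["wall", "book"]]
print(actions)  # ['nothing', 'push']
```

Nothing in the model says salience is "everything that isn't an action"; that rule only exists so the sketch runs.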

Some of the more keen-eyed among you may be seeing something of a Cartesian theater here: after all, how is the manipulator 'determining' anything? Admittedly, I am unsure of the specifics, but I do have a tool that can shed some light on this: utility.

Utility is, in short, a value (probably associated with a symbol, though nothing precludes a purely implicit representation of it) that the manipulator attempts to optimize for. The manipulator needs it in order to function.

Why is it necessary?

It's something the manipulator can optimize for; it represents a goal for it. If it didn't have any goal, it would at best manipulate symbols at random and at worst not manipulate symbols at all.

How does this help?

Well, it gives us a simple, specific goal (i.e., not 'be intelligent lol') to reason about when working out how the manipulator works.

Here is a simple pseudo-algorithm for how the manipulator would determine a course of action given a symbol associated with a utility (referred to below as goal):

- FAIL: Split goal into separate sub-goals and try to solve each of those.

- 1: Find a symbol in memory that is similar to goal; retrieve it and its associated symbols.

  - if this fails, take a subset of goal and try again

  - if it continues to fail, go to FAIL

- 2: Use the retrieved symbols and the already 'loaded' symbols to construct a scenario that leads to goal.

  - if this fails, retrieve more associated symbols and try again

  - if it continues to fail, go to 1 and find a different symbol

  - if it still continues to fail, go to FAIL

- 3: Find a symbol in memory that is similar to scenario and also has outputs associated with it; retrieve it and its associated symbols.

  - if this fails, take a subset of scenario and try again

  - if it continues to fail, go to 2 and construct a different scenario

  - if it still continues to fail, go to FAIL

- 4: Alter the retrieved symbol until its non-output parts match scenario, then hand it to output.
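The control flow of this pseudo-algorithm maps naturally onto nested loops over candidate generators: running out of candidates at one step falls back to the previous step, and running out entirely triggers FAIL. The sketch below shows only that backtracking skeleton. The step functions are hypothetical placeholders (here, lookup tables over strings), and "take a subset and try again" or "retrieve more associated symbols" is assumed to happen inside each generator. The depth limit on splitting is also an assumption, added so a goal that splits into itself can't recurse forever.

```python
from typing import Callable, Iterable, List, Optional

def solve(goal: str,
          find_similar: Callable[[str], Iterable[str]],
          build_scenarios: Callable[[str, str], Iterable[str]],
          find_with_outputs: Callable[[str], Iterable[str]],
          alter: Callable[[str, str], List[str]],
          split: Callable[[str], List[str]],
          depth: int = 0, max_depth: int = 2) -> Optional[List[str]]:
    """Return output actions for goal, or None if every route fails."""
    # Step 1: candidate symbols similar to goal (find_similar also tries subsets of goal)
    for retrieved in find_similar(goal):
        # Step 2: scenarios leading to goal; running out means "go to 1, find a different symbol"
        for scenario in build_scenarios(goal, retrieved):
            # Step 3: remembered symbols with outputs; running out means "go to 2"
            for template in find_with_outputs(scenario):
                # Step 4: alter the template to match scenario and hand it to output
                return alter(template, scenario)
    # FAIL: every route is exhausted, so split goal into sub-goals (depth-limited)
    if depth >= max_depth:
        return None
    sub_goals = split(goal)
    if not sub_goals:
        return None
    plans = [solve(g, find_similar, build_scenarios, find_with_outputs, alter, split,
                   depth + 1, max_depth) for g in sub_goals]
    if any(p is None for p in plans):
        return None
    return [action for p in plans for action in p]

# Hypothetical toy memory: lookup tables standing in for real similarity search
SIMILAR = {"book-on-floor": ["book-falls", "object-falls"]}
SCENARIOS = {"object-falls": ["push-object-off-edge"]}  # nothing can be built from "book-falls"
WITH_OUTPUTS = {"push-object-off-edge": ["move-into(object)"]}
SUB_GOALS = {"clear-table": ["book-on-floor"]}

steps = dict(
    find_similar=lambda g: SIMILAR.get(g, []),
    build_scenarios=lambda g, r: SCENARIOS.get(r, []),
    find_with_outputs=lambda s: WITH_OUTPUTS.get(s, []),
    alter=lambda t, s: [t.replace("object", "book")],  # toy "alteration"
    split=lambda g: SUB_GOALS.get(g, []),
)
print(solve("book-on-floor", **steps))  # ['move-into(book)'], after backtracking past "book-falls"
print(solve("clear-table", **steps))    # ['move-into(book)'], via FAIL and a sub-goal
```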

As for how a goal is obtained in the first place? That's even easier. One way has already been shown: splitting a goal into sub-goals. Another comes into play when the manipulator utterly fails to fulfill a goal: it goes through memory and retrieves a previous goal that hasn't been fulfilled.

However, that raises the question of what happens when a mind has just been created, when there is nothing in memory yet to derive a goal from. There are a few potential solutions to this:

- pre-populate the mind with a few symbols with utility

- give manipulator a process that can generate utility symbols without them already being present

- allow the input structure to make utility symbols upon certain sensations
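One way to picture goal acquisition is a pool of utility-bearing symbols. The sketch below assumes Python's `heapq` as the store and assumes the highest-utility unfulfilled goal is retrieved first (the text doesn't say how one is chosen). It covers the first and third bootstrapping options; the `GoalPool` class and the `reflexes` table mapping sensations to utilities are hypothetical.

```python
import heapq

class GoalPool:
    """Hypothetical store of unfulfilled goals (symbols carrying a utility)."""

    def __init__(self, innate=()):
        # Option 1: pre-populate the mind with a few symbols with utility
        self._heap = [(-utility, symbol) for symbol, utility in innate]
        heapq.heapify(self._heap)

    def add(self, symbol, utility):
        # heapq is a min-heap, so utility is negated to pop the highest first
        heapq.heappush(self._heap, (-utility, symbol))

    def on_sensation(self, sensation, reflexes):
        # Option 3: input creates a utility symbol upon certain sensations
        if sensation in reflexes:
            self.add(f"stop:{sensation}", reflexes[sensation])

    def next_unfulfilled(self):
        # After utterly failing a goal: retrieve a previous goal that hasn't been fulfilled
        return heapq.heappop(self._heap)[1] if self._heap else None

pool = GoalPool(innate=[("explore", 1.0)])
pool.on_sensation("pain", reflexes={"pain": 5.0})
print(pool.next_unfulfilled(), pool.next_unfulfilled(), pool.next_unfulfilled())
# stop:pain explore None
```

Popping removes the goal from the pool; a goal that fails again would need to be re-added.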

The Low Level

With all the talk of symbols in the previous model, you may be wondering what, exactly, symbols actually are. Put simply, they are objects that can represent all types of information that a given mind uses.

Let's start with what I'm dubbing primitives. These are the most basic units of symbolic information and are essentially just a value associated with a single type of information.

What primitives represent may differ between minds, but you can generally expect ones that represent:

- the most basic units of sensory information. For example, in humans you might expect to see primitives representing redness, greenness, and blueness.

- useful information derived from the above (e.g., a primitive representing spatial information)

- time

- utility

- various types of output signal (or at least a sense that is highly correlated with them)

Symbols can also be composed: essentially putting a bunch of symbols into a group and treating the group as a single symbol. Of course, these groups can also be composed, leading to nested groups.

As an example, compose a primitive representing redness (given a high value) with a symbol representing a single point in space and you get a symbol representing a red point in space. Take a lot of these symbols and compose *them* together and you can get a symbol representing a sphere, or a cube, or any other shape, in red. If you take a lot of variants of *those* symbols and compose each of them with a primitive representing time, you can get a shape that arbitrarily moves and/or morphs.

You can also have an empty symbol: a symbol group with no constituent symbols.
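To make composition concrete, here is a minimal Python sketch, assuming a primitive is a (kind, value) pair and a composed symbol is a group holding a list of other symbols. The class names, the `x`/`y`/`z` encoding of a point, and the `depth` helper are all hypothetical illustrations, not part of the model.

```python
from dataclasses import dataclass, field
from typing import List, Union

@dataclass(frozen=True)
class Primitive:
    kind: str     # the single type of information, e.g. "red", "x", "time", "utility"
    value: float  # how much of it

@dataclass
class Group:
    children: List["Symbol"] = field(default_factory=list)  # empty list = the empty symbol

Symbol = Union[Primitive, Group]

def point(x: float, y: float, z: float) -> Group:
    return Group([Primitive("x", x), Primitive("y", y), Primitive("z", z)])

def red_segment(offset: float, length: int = 3) -> Group:
    # A red "shape": red points composed together; offset produces variants of it
    return Group([Group([Primitive("red", 1.0), point(offset + i, 0, 0)]) for i in range(length)])

def depth(symbol: Symbol) -> int:
    # Number of nested group layers (a primitive has depth 0)
    if isinstance(symbol, Primitive):
        return 0
    return 1 + max((depth(child) for child in symbol.children), default=0)

red_point = Group([Primitive("red", 1.0), point(0, 0, 0)])
# Variants of the shape, each composed with a time primitive: a shape that moves
moving_segment = Group([Group([Primitive("time", t), red_segment(offset=t)]) for t in range(4)])
empty = Group()

print(depth(red_point), depth(red_segment(0)), depth(moving_segment), depth(empty))  # 2 3 5 1
```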

~~~

So we have a nice way of storing symbolic information, but how can it actually be used? Well, there are a number of operations the manipulator can perform on symbols:

- Traversal - It can arbitrarily step down into and up out of any given 'layer' in a symbol.

- Retrieval - It can get symbols from elsewhere.

- Composition - It can take symbols and compose them to form a new symbol.

- Addition - It can take symbols and place them within an existing symbol.

- Deletion - It can remove a symbol and its contents.

- Abstraction - It can remove a symbol's contents but not remove the symbol itself; this preserves structure.

(note: probably not an exhaustive list)
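Each operation can be sketched as a small function over nested groups. Assumptions to flag: symbols are addressed by a hypothetical path of child indices, "memory" is a plain dict, retrieval returns a copy so edits don't touch the stored original, and abstraction is only defined for groups (it empties the group but leaves it in its slot). None of these names come from the text.

```python
import copy
from dataclasses import dataclass, field
from typing import List, Union

@dataclass
class Primitive:
    kind: str
    value: float

@dataclass
class Group:
    children: List["Symbol"] = field(default_factory=list)

Symbol = Union[Primitive, Group]

def traverse(root: Symbol, path: List[int]) -> Symbol:
    # Traversal: step down one layer per index; stepping back up = using a shorter path
    for index in path:
        root = root.children[index]
    return root

def retrieve(memory: dict, key: str) -> Symbol:
    # Retrieval: fetch a copy so edits don't alter the stored original
    return copy.deepcopy(memory[key])

def compose(*symbols: Symbol) -> Group:
    # Composition: group symbols into a new symbol
    return Group(list(symbols))

def add(target: Group, symbol: Symbol) -> None:
    # Addition: place a symbol inside an existing one
    target.children.append(symbol)

def delete(parent: Group, index: int) -> None:
    # Deletion: remove a child symbol together with its contents
    del parent.children[index]

def abstract(symbol: Group) -> None:
    # Abstraction: drop the contents but keep the (now empty) symbol in its slot
    symbol.children.clear()

memory = {"ball-knocks-block": compose(compose(Primitive("ball", 1.0)),
                                       compose(Primitive("block", 1.0)))}
scene = retrieve(memory, "ball-knocks-block")
slot = traverse(scene, [0])
abstract(slot)                       # the ball's slot is now an empty symbol
add(slot, Primitive("self", 1.0))    # slot yourself in where the ball was
print(traverse(scene, [0, 0]).kind)  # self
print(traverse(memory["ball-knocks-block"], [0, 0]).kind)  # ball (stored copy untouched)
```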

Extrapolating from the Model

Here is an example to show what this model might look like in action:

Imagine you are a floating sphere. You have full 360-degree vision, and you can move in any direction. The room you are in is empty, save for two objects (already separated via basic edge detection) that you haven't recognized yet.

Manipulator looks through memory for something matching the larger object first, and finds a very close match with a table. It does the same for the second object, but a conclusive match isn't found; there just aren't enough distinctive features.

The table rotates slightly, and you immediately see a new feature on the second object. Manipulator tries again and gets a match: it's a book.

At that moment, a symbol is seamlessly inserted into your mind. It contains the book resting on the floor. This symbol is composed with a positive utility value.

Manipulator searches through memory for a symbol containing a single book both on the table and on the floor. It fails to find one. It abstracts away the details of the table and floor, searching for a symbol containing a single book at both a higher and a lower elevation. It still fails to find one.

It then abstracts away detail about the book and searches for a single solid object at both a higher and a lower elevation. It finds a match (a ball hitting a block and knocking it off a small cliff), abstracts away those details, and retrieves it.

Manipulator takes the newly retrieved symbol and slots in a symbol representing yourself where the ball was, the book where the block was, and the table where the top of the cliff was, then further manipulates it so that it matches the conditions of the room.

Manipulator looks through memory for a symbol where you move as in the newly stitched-together symbol. It finds one, retrieves its output symbols, and sends them to output.

You then move toward the book, knock into it, and then the book falls off the table and onto the floor.
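The progressive abstraction in this example can be sketched as a search that retries with coarser and coarser queries, followed by slotting new symbols into the retrieved episode's roles. This is a toy under loud assumptions: episodes are sets of hypothetical (object, place) facts at their start and end, and the two lookup tables stand in for "abstracting away details". None of the names come from the model itself.

```python
from typing import Dict, List, Set, Tuple

Fact = Tuple[str, str]  # (object, place)

# Hypothetical abstraction hierarchy: what each detail becomes once abstracted away
PLACE_TO_ELEVATION = {"table": "higher", "floor": "lower", "cliff-top": "higher", "cliff-bottom": "lower"}
OBJECT_TO_CLASS = {"book": "solid-object", "ball": "solid-object", "block": "solid-object"}

def abstract_facts(facts: Set[Fact], level: int) -> Set[Fact]:
    # Level 0 keeps everything, 1 abstracts places to elevations, 2 also abstracts objects
    result = set()
    for obj, place in facts:
        if level >= 1:
            place = PLACE_TO_ELEVATION.get(place, place)
        if level >= 2:
            obj = OBJECT_TO_CLASS.get(obj, obj)
        result.add((obj, place))
    return result

def search(start: Set[Fact], end: Set[Fact], memory: List[dict], max_level: int = 2):
    # Try increasingly abstract queries until some remembered episode contains the query
    for level in range(max_level + 1):
        q_start, q_end = abstract_facts(start, level), abstract_facts(end, level)
        for episode in memory:
            if (q_start <= abstract_facts(episode["start"], level)
                    and q_end <= abstract_facts(episode["end"], level)):
                return level, episode
    return None

def substitute(facts: Set[Fact], roles: Dict[str, str]) -> Set[Fact]:
    # Slot new symbols into the roles of the retrieved episode
    return {(roles.get(obj, obj), roles.get(place, place)) for obj, place in facts}

MEMORY = [{"name": "ball knocks block off a small cliff",
           "start": {("ball", "cliff-top"), ("block", "cliff-top")},
           "end": {("ball", "cliff-top"), ("block", "cliff-bottom")}}]

level, episode = search({("book", "table")}, {("book", "floor")}, MEMORY)
roles = {"ball": "self", "block": "book", "cliff-top": "table", "cliff-bottom": "floor"}
print(level, episode["name"])                     # 2 ball knocks block off a small cliff
print(sorted(substitute(episode["end"], roles)))  # [('book', 'floor'), ('self', 'table')]
```

As in the narrative, the first two levels fail and only the third (object details abstracted away) finds the ball-and-block episode.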

~~~

Ok, great, so we have a model, but is it really able to support all aspects of cognition? As far as I can tell, yes, and I'll prove it by explaining how it represents those various aspects.

Let's start with the ones I listed at the beginning.

Memory formation and recall:

An explicit feature of the model. Admittedly, it's less than clear how memory formation and storage would work, as that will probably depend heavily on implementation. Recall would mostly be done via similarity search, though exactly how is, again, implementation-dependent.
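As one illustration of similarity-based recall, the sketch below treats each stored symbol as a set of features and ranks them by Jaccard similarity (shared features over all features). Jaccard is a swapped-in choice, not something the model specifies; the feature sets, `k`, and `threshold` are hypothetical.

```python
def jaccard(a: set, b: set) -> float:
    # Shared features divided by all features (1.0 for two empty sets)
    union = a | b
    return len(a & b) / len(union) if union else 1.0

def recall(cue: set, memory: dict, k: int = 2, threshold: float = 0.2) -> list:
    # Rank stored symbols by similarity to the cue; keep the top k above a threshold
    ranked = sorted(memory.items(), key=lambda item: jaccard(cue, item[1]), reverse=True)
    return [name for name, features in ranked[:k] if jaccard(cue, features) >= threshold]

memory = {
    "apple": {"red", "round", "small"},
    "brick": {"red", "square"},
    "beach ball": {"blue", "round", "large"},
}
print(recall({"red", "round"}, memory))  # ['apple', 'brick']
```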

Arbitrary manipulation of ideas:

You should be able to do basically anything you want with a symbol with the operations already given.

Reasonably accurate modelling of the world via idea manipulation:

Since internal modelling would be done by 'gluing together' the relevant parts of symbols in memory that encode things that actually happened (according to the senses), it should be accurate enough.

Goal-orientation:

Explicitly represented via utility.

Translation of sensory input to ideal representation:

Explicitly represented. Specifics are dependent on implementation, obviously.

Translation of ideas to actions:

See above.

Metacognition:

Meaning the ability to think about thoughts. This one's actually non-trivial. If you had a symbol that was already known to be a thought, it would be easy: just abstract away the detail and you'd have a symbol representing thoughts in general. But that's just the problem: you *can't* already know that something is a thought if you don't already know what a thought is.

You *could* argue that a thought would naturally be abstracted away as such at some point, through sheer chance if nothing else, but that feels like enough of a handwave that I'm not willing to rely on it.

So, what separates thoughts from non-thoughts? Why, being inside your mind, of course! You might think this just makes the problem worse, but consider this: the only thing that would be considered 'outside the mind' here would be direct sensory information.

Indeed, the separation between sensory and non-sensory symbols is almost inherent to the model. If the sensory symbols at any given moment are all composed with each other (very reasonable), and that symbol is composed with something that clearly demarcates it as sensory information, you get an easy way to tell it apart from non-sensory information (i.e., the mind and its contents). So thoughts might be most simply represented as 'the symbols that are in the symbol that doesn't represent the external world'.

Counterfactuals:

There is nothing preventing a symbol from being created that runs counter to the truth, and it should be possible to represent a truth value with a symbol using the internal vs. external world distinction (sensory information is treated as true by default).

Sense of self:

Inherent to the internal vs external world distinction.

Now we move on to some more subjective experiences.

Awareness:

Seeing as this is the part of consciousness you actually experience, a way to determine what one is aware of under this model is pretty important. Fortunately, there is a simple way to do so: at any given moment, one is aware of any symbol the manipulator is interacting with.

There are two problems with the above method. One, it only allows for a binary aware/unaware distinction, which is not how awareness actually works. Two, it doesn't actually make sense to talk about someone at a single moment in time as being 'aware' at all (you probably wouldn't consider someone who was put into complete stasis, down to the subatomic level, to be at all aware).

Fortunately, a very simple tweak fixes both of these issues: over a period of time, the frequency with which the manipulator interacts with a symbol denotes how aware of that symbol one is.

As an aside, a common model of awareness is that of working memory, a temporary store where one is aware of the symbols inside it. While this model can support that, it isn't necessary; all that's needed is for the manipulator to preferentially interact with symbols it has recently interacted with, with the limited size of working memory explained by the maximum number of symbols the manipulator can interact with at any given time.
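The frequency-based definition can be sketched with a sliding window of recent manipulator interactions. The window length, the `AwarenessTracker` name, and using "most frequently touched" as the preferential-revisit rule are all assumptions for illustration; `capacity` stands in for the maximum number of symbols the manipulator can interact with at once.

```python
from collections import Counter, deque

class AwarenessTracker:
    """Hypothetical: awareness of a symbol = share of recent manipulator interactions."""

    def __init__(self, window: int = 10):
        self.recent = deque(maxlen=window)  # only the last `window` interactions count

    def interact(self, symbol: str) -> None:
        self.recent.append(symbol)

    def awareness(self, symbol: str) -> float:
        # Graded rather than binary, and defined over a period rather than a single moment
        if not self.recent:
            return 0.0
        return self.recent.count(symbol) / len(self.recent)

    def working_set(self, capacity: int = 4) -> list:
        # Symbols the manipulator would preferentially revisit, limited by `capacity`
        return [symbol for symbol, _ in Counter(self.recent).most_common(capacity)]

tracker = AwarenessTracker(window=5)
for symbol in ["song", "book", "book", "table", "book"]:
    tracker.interact(symbol)
print(tracker.awareness("book"), tracker.awareness("song"))  # 0.6 0.2
tracker.interact("book")
tracker.interact("book")
print(tracker.awareness("song"), tracker.working_set(capacity=1))  # 0.0 ['book']
```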

Change Blindness:

This is explained by the manipulator rarely checking whether a symbol it is interacting with has changed since it started interacting with it. Do note that the symbol itself is still updated, as otherwise one would never notice the change at all.
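A small simulation of this explanation, assuming a hypothetical version counter on the symbol and a fixed `check_every` interval at which the manipulator compares against what it last saw. Neither mechanism comes from the text; they just make "doesn't check often" and "the symbol is still updated" concrete.

```python
def first_noticed(change_at: int, steps: int, check_every: int, symbol_updates: bool = True):
    """Step at which the manipulator notices a change, or None if it never does."""
    symbol = {"value": "red car", "version": 0}
    seen_version = symbol["version"]  # the version the manipulator last checked
    for step in range(1, steps + 1):
        if step == change_at and symbol_updates:
            # Input keeps the symbol itself up to date
            symbol["value"], symbol["version"] = "blue car", symbol["version"] + 1
        if step % check_every == 0:
            # Only now does the manipulator compare against what it last saw
            if symbol["version"] != seen_version:
                return step
            seen_version = symbol["version"]
    return None

print(first_noticed(change_at=3, steps=10, check_every=1))  # 3: frequent checks catch it at once
print(first_noticed(change_at=3, steps=10, check_every=5))  # 5: noticed late
print(first_noticed(change_at=3, steps=4, check_every=5))   # None: missed within this window
print(first_noticed(change_at=3, steps=10, check_every=5, symbol_updates=False))  # None
```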

Aphantasia:

Aphantasia is interesting. We know that the minds of people with aphantasia aren't entirely unable to process visual information, because that would mean they were all blind (which they aren't). We also know that their minds are storing visual information in memory, since many of them report dreams with visual content (which has some interesting implications for how dreams work as well).

An explanation that fits these criteria is that, for whatever reason, manipulator is excluding visual symbols entirely from recollection.

Earworms:

Have you ever found yourself singing/humming a song without even realizing it? How does that even work (under this model)?

By my reasoning, there are two phenomena that go into this. The first we have already covered: if the symbol representing the song is accessed infrequently enough, you may as well be totally unaware of it.

So the symbol for the song is accessed and, for whatever reason, immediately sent to output. Then you start singing the song. Then you hear yourself singing it. At some point while hearing yourself, the noise is recognized as the song, bringing the symbol representing it (which would otherwise have faded back into your subconscious) back up for just long enough for the cycle to repeat itself.

Note that this requires the second phenomenon: output is able to keep doing its thing without continually being fed instructions.
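The loop can be simulated in a few lines, assuming discrete time steps, a hypothetical `output_duration` (how long output keeps singing after one instruction, i.e. the second phenomenon) and a hypothetical `recognize_after` (how many steps of hearing it takes input to recognize the song). The function returns the steps at which the manipulator touches the song symbol; those touches are sparse, which matches the first phenomenon of being barely aware of it.

```python
def earworm(steps: int, output_duration: int, recognize_after: int) -> list:
    """Steps at which the manipulator accesses the song symbol."""
    accesses = [0]              # incidental first access; the symbol is handed to output
    remaining = output_duration  # output keeps singing without further instructions
    heard = 0
    for t in range(1, steps):
        if remaining == 0:
            break               # output stopped and nothing re-triggered the symbol
        remaining -= 1
        heard += 1              # input hears yourself singing
        if heard == recognize_after:
            accesses.append(t)  # recognition briefly brings the symbol back up...
            remaining = output_duration  # ...and it is sent to output again
            heard = 0
    return accesses

print(earworm(10, output_duration=3, recognize_after=2))  # [0, 2, 4, 6, 8]: self-sustaining
print(earworm(10, output_duration=1, recognize_after=2))  # [0]: output stops too soon
```

When output stops before recognition kicks in (`output_duration < recognize_after`), the cycle dies out, which is why the second phenomenon is needed.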

~~~

There is more I have to say on this subject, but I'm satisfied with what I have down for now.